Defense Unicorns UDS Registry Featured on DefenseScoop

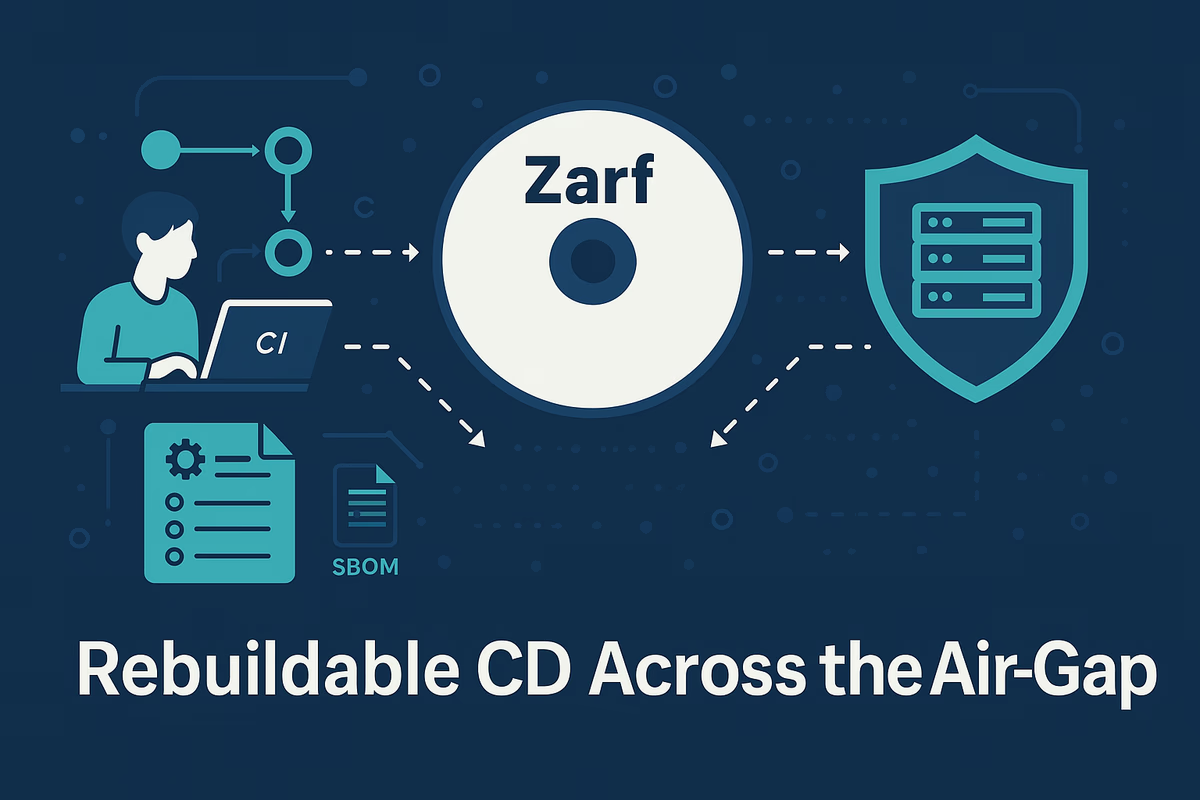

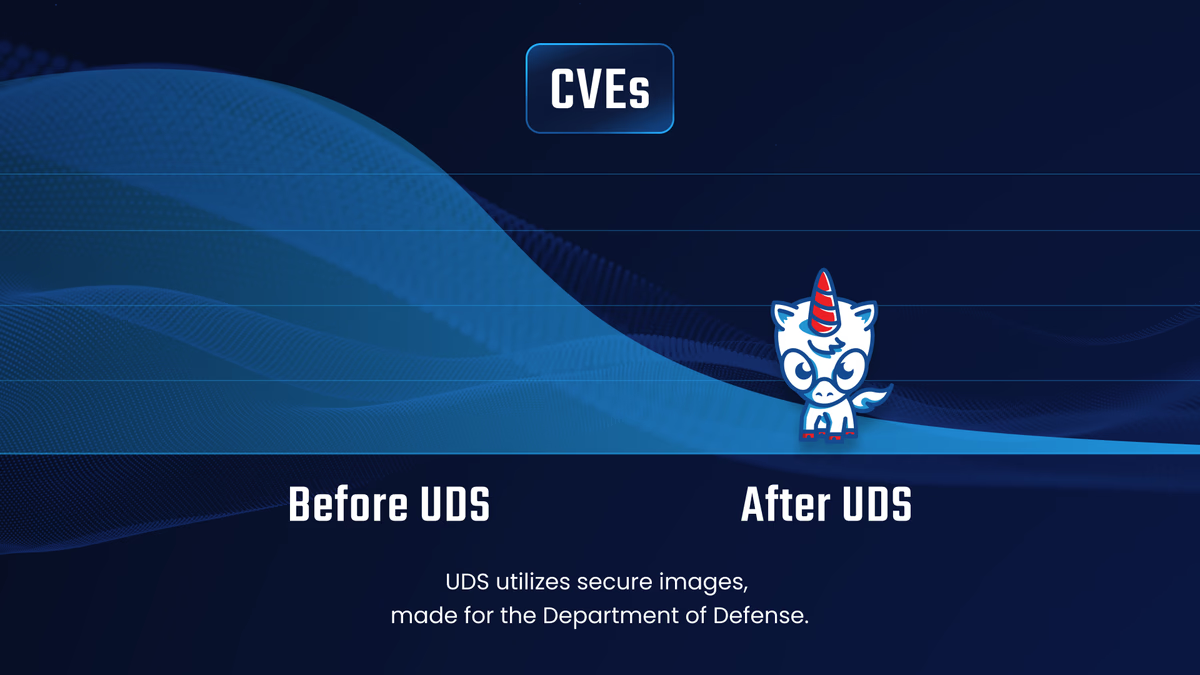

DefenseScoop highlights how the UDS Registry is transforming mission software delivery across the Department of Defense, featuring insights from CEO Rob Slaughter on secure, airgap-native software…